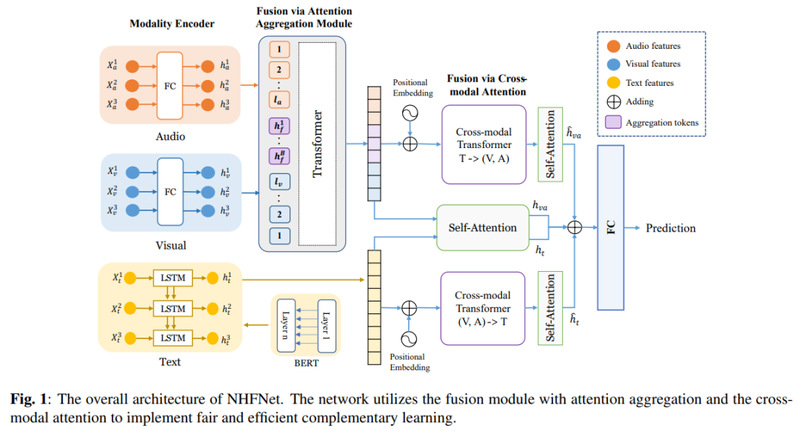

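The paper "NHFNet: A Non-Homogeneous Fusion Network for Multimodal Sentiment Analysis", written by Ziwang Fu (付子旺, master's student), Qing Xu (许晴, master's student), Feng Liu* (刘峰), Jiayin Qi* (齐佳音) and colleagues from the joint research team of the Institute of Artificial Intelligence and Change Management at Shanghai University of International Business and Economics, has been accepted by the 2022 IEEE International Conference on Multimedia and Expo (ICME), an international academic conference rated Class B in the China Computer Federation (CCF) recommended list. The study proposes a novel non-homogeneous multimodal fusion network for interaction among three modalities with different information densities. It uses a fusion module with attention aggregation together with cross-modal attention fusion to make that interaction fair and efficient. The network's fusion strategy reinforces low-level signal features, overcomes the quadratic complexity of pairwise attention, and improves the integration of complementary information across modalities. The team ran experiments on the CMU-MOSEI dataset under both aligned and unaligned settings, and the results show that NHFNet outperforms current state-of-the-art fusion strategies.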

Research Summary

Abstract:

Fusion technology is crucial for multimodal sentiment analysis. Recent attention-based fusion methods demonstrate high performance and strong robustness. However, these approaches ignore the difference in information density among the three modalities, i.e., visual and audio have low-level signal features and conversely text has high-level semantic features. To this end, we propose a non-homogeneous fusion network (NHFNet) to achieve multimodal information interaction. Specifically, a fusion module with attention aggregation is designed to handle the fusion of visual and audio modalities to enhance them to high-level semantic features. Then, cross-modal attention is used to achieve information reinforcement of text modality and audio-visual fusion. NHFNet compensates for the differences in information density of different modalities enabling their fair interaction. To verify the effectiveness of the proposed method, we set up the aligned and unaligned experiments on the CMU-MOSEI dataset, respectively. The experimental results show that the proposed method outperforms the state-of-the-art.
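The two-stage idea in the abstract can be illustrated with a minimal NumPy sketch. This is not the authors' implementation: the function names, the use of a small set of aggregation tokens (`agg_tokens`), the residual connection, the feature sizes, and the random inputs are all assumptions made for illustration, and only the direction in which text attends to the fused audio-visual summary is shown. In stage 1, a few aggregation tokens attend over the concatenated audio and visual sequences, so the number of attention scores is `k * (La + Lv)` rather than growing with the product of sequence lengths. In stage 2, text features query that fused summary.

```python
import numpy as np

def softmax(x, axis=-1):
    # Numerically stable softmax along the given axis.
    x = x - x.max(axis=axis, keepdims=True)
    e = np.exp(x)
    return e / e.sum(axis=axis, keepdims=True)

def attention(query, key, value):
    # Scaled dot-product attention; query: (Lq, d), key/value: (Lk, d).
    scores = query @ key.T / np.sqrt(query.shape[-1])
    return softmax(scores, axis=-1) @ value

def fuse_audio_visual(audio, visual, agg_tokens):
    # Stage 1 (assumed form): k aggregation tokens summarize the
    # concatenated low-level audio and visual sequences.
    av = np.concatenate([audio, visual], axis=0)   # (La + Lv, d)
    return attention(agg_tokens, av, av)           # (k, d)

def reinforce_text(text, fused_av):
    # Stage 2 (assumed form): text queries attend to the fused
    # audio-visual tokens; a residual keeps the original text features.
    return text + attention(text, fused_av, fused_av)   # (Lt, d)

rng = np.random.default_rng(0)
d = 16                                   # hypothetical shared feature size
audio = rng.normal(size=(50, d))         # La = 50 frames (random stand-in)
visual = rng.normal(size=(40, d))        # Lv = 40 frames (random stand-in)
text = rng.normal(size=(20, d))          # Lt = 20 tokens (random stand-in)
agg_tokens = rng.normal(size=(4, d))     # k = 4; learned in a real model

fused_av = fuse_audio_visual(audio, visual, agg_tokens)
text_out = reinforce_text(text, fused_av)
print(fused_av.shape, text_out.shape)
```

A trained model would add learned projections, multiple heads, and normalization; the sketch keeps only the data flow so the shapes at each stage are easy to follow.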